Gradient descent on the negative log-likelihood

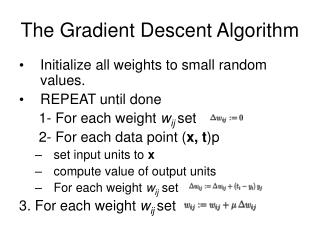

For everything to be more straightforward, we have to dive deeper into the math. Here, we use the negative log-likelihood. We choose the parameters that maximize the likelihood, and we assume that the $y_i$'s are independent given the input features $\mathbf{x}_i$ and $\mathbf{w}$. The probability function in Figure 5, $P(Y=y_i \mid X=x_i)$, captures the form with both $Y=1$ and $Y=0$. The negative log-likelihood $L(\mathbf{w}, b \mid z)$ is then what we usually call the logistic loss. We also need to determine how many times we want to go through the training set, i.e., the number of epochs.

What is log-odds?

Note that

$$\frac{\partial p(x_i)}{\partial \beta} = p(x_i)\,\bigl(1 - p(x_i)\bigr)\,\mathbf{x}_i$$

and not just $p(x_i)\,(1 - p(x_i))$. Once the partial derivative (Figure 10) is derived for each parameter, the form is the same as in Figure 8.

My negative log-likelihood function is given as: … Its gradient is supposed to be

$$\nabla_{\theta} \log L = X^T\left(y - e^{X\theta}\right).$$

This is my implementation, but I keep getting the error `ValueError: shapes (31,1) and (2458,1) not aligned: 1 (dim 1) != 2458 (dim 0)`. X is a DataFrame of size (2458, 31), y is a DataFrame of size (2458, 1), and theta is a DataFrame of size (31, 1). I cannot figure out what I am missing.
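
The gradient in the question, $X^T(y - e^{X\theta})$, has the form of a Poisson log-likelihood with a log link. The question does not preserve the likelihood itself, so the following is a minimal NumPy sketch that assumes that model. The function names, the toy data, and the learning rate are hypothetical. The quoted error is what `np.dot` raises when a (31, 1) array is multiplied by a (2458, 1) array. Keeping every product in the order `X @ theta` and `X.T @ residual` keeps the dimensions aligned, and this works best on plain arrays obtained with `.to_numpy()`.

```python
import numpy as np


def poisson_nll(theta, X, y):
    """Poisson negative log-likelihood with a log link (assumed model).

    The constant sum(log(y_i!)) is dropped because it does not depend on theta.
    Shapes: X is (n, d), theta is (d, 1), y is (n, 1).
    """
    eta = X @ theta                          # (n, d) @ (d, 1) -> (n, 1)
    return float(np.sum(np.exp(eta) - y * eta))


def poisson_nll_grad(theta, X, y):
    """Gradient of the NLL: X^T (e^{X theta} - y), the negative of X^T (y - e^{X theta})."""
    eta = X @ theta                          # (n, 1)
    return X.T @ (np.exp(eta) - y)           # (d, n) @ (n, 1) -> (d, 1), same shape as theta


def fit_poisson_gd(X, y, lr=0.1, n_iter=2000):
    """Plain batch gradient descent on the average NLL (gradient divided by n)."""
    n, d = X.shape
    theta = np.zeros((d, 1))
    for _ in range(n_iter):
        theta -= lr * poisson_nll_grad(theta, X, y) / n
    return theta


# Hypothetical toy data: the question's row count (2458) but only 3 columns.
rng = np.random.default_rng(0)
n = 2458
X = np.column_stack([np.ones(n), 0.5 * rng.normal(size=(n, 2))])
true_theta = np.array([[0.5], [0.3], [-0.2]])
y = rng.poisson(np.exp(X @ true_theta)).astype(float)

theta_hat = fit_poisson_gd(X, y)

# Multiplying in the wrong order reproduces the kind of error quoted above:
# np.dot(np.zeros((31, 1)), np.zeros((2458, 1)))  # raises ValueError: shapes ... not aligned
```

With pandas inputs, pandas aligns `DataFrame` products on labels. Converting `X`, `y`, and `theta` with `.to_numpy()` first sidesteps label-alignment surprises, and the returned gradient then has shape (31, 1), matching `theta`.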

The task is to compute the derivative $\frac{\partial}{\partial \beta} L(\beta)$. Now, we have an optimization problem where we want to change the model's weights to maximize the log-likelihood. With a prior $P(\mathbf{w})$ on the weights, the posterior is

$$P(\mathbf{w} \mid D) = P(\mathbf{w} \mid X, \mathbf{y}) \propto P(\mathbf{y} \mid X, \mathbf{w}) \; P(\mathbf{w}).$$

Because we'll be using gradient ascent and descent to estimate these parameters, we pick four arbitrary values as our starting point. Gradient descent is a process that occurs in the backpropagation phase. Its goal is to repeatedly compute the gradient with respect to the model's parameters and move the parameters in the opposite direction.

The higher the log-odds value, the higher the probability. The probabilities are turned into target classes (e.g., 0 or 1) that predict, for example, success (1) or failure (0).

Step 3: let's find the negative log-likelihood. For a binary logistic regression classifier, we model $P(y \mid \mathbf{x}_i)$ directly and assume that it takes on exactly the form $P(y \mid \mathbf{x}_i)=\frac{1}{1+e^{-y(\mathbf{w}^T \mathbf{x}_i+b)}}$, with labels $y \in \{-1, +1\}$. For a better understanding of the connection between Naive Bayes and logistic regression, you may take a peek at these excellent notes. The negative log-likelihood function seems more complicated than that of a usual logistic regression. Let's examine what is going on during each epoch interval.
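
To make these pieces concrete, here is a small NumPy sketch. It assumes labels coded as $y \in \{-1, +1\}$, so that $P(y \mid \mathbf{x}_i) = 1/(1+e^{-y(\mathbf{w}^T\mathbf{x}_i+b)})$. The function names, the 0.5 threshold, and the example numbers are illustrative choices, not taken from the source. The sketch shows three things. First, the log-odds are the linear score. Second, a higher log-odds gives a higher probability. Third, the negative log-likelihood is the logistic loss $\sum_i \log\bigl(1 + e^{-y_i(\mathbf{w}^T\mathbf{x}_i + b)}\bigr)$.

```python
import numpy as np


def log_odds(X, w, b):
    """Linear score w^T x + b; equals log(p / (1 - p)) for p = P(y = +1 | x)."""
    return X @ w + b


def prob_positive(X, w, b):
    """P(y = +1 | x) = 1 / (1 + exp(-(w^T x + b))); increases with the log-odds."""
    return 1.0 / (1.0 + np.exp(-log_odds(X, w, b)))


def predict_class(X, w, b, threshold=0.5):
    """Turn probabilities into target classes: 1 (success) or 0 (failure)."""
    return (prob_positive(X, w, b) >= threshold).astype(int)


def logistic_nll(X, y, w, b):
    """Logistic loss for labels y in {-1, +1}: sum_i log(1 + exp(-y_i * score_i)).

    np.logaddexp(0, z) evaluates log(1 + exp(z)) without overflow.
    """
    return float(np.sum(np.logaddexp(0.0, -y * log_odds(X, w, b))))


def logistic_nll_grad(X, y, w, b):
    """Gradient of logistic_nll with respect to w and b."""
    coeff = -y / (1.0 + np.exp(y * log_odds(X, w, b)))  # -y_i * sigmoid(-y_i * score_i)
    return X.T @ coeff, float(np.sum(coeff))


# Hypothetical example values.
X = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.5]])
y = np.array([1.0, -1.0, -1.0])
w = np.array([2.0, -1.0])
b = 0.5

print(log_odds(X, w, b))       # [ 2.5 -0.5 -2. ]
print(predict_class(X, w, b))  # [1 0 0]
```

Working with `np.logaddexp` instead of `np.log(1 + np.exp(...))` is a numerical-stability choice. It does not change the loss.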

We assume the same probabilistic form $P(y \mid \mathbf{x}_i)=\frac{1}{1+e^{-y(\mathbf{w}^T \mathbf{x}_i+b)}}$, but we do not restrict ourselves in any way by making assumptions about $P(\mathbf{x} \mid y)$ (in fact, it can be any member of the exponential family). In other words, we make few assumptions on $P(\mathbf{x}_i \mid y)$. With more than two classes, the same idea gives $P(y_k \mid x) = \text{softmax}_k(a_k(x))$. This modeling approach is easily interpreted, efficiently implemented, and capable of accurately capturing many linear relationships. It does, however, come with several significant limitations.

Log-likelihood gradient and Hessian.

What about minimizing the cost function? This is the Gaussian approximation for LR. For efficiently computing the posterior, we employ Langevin dynamics (cf. Risken, 1996). It sequentially adds a normal random perturbation to each update of the gradient descent optimization and obtains the stationary distribution approximating the posterior distribution (Cheng et al., 2018).

As the algorithm continues to iterate through the training instances in each epoch, the parameter values oscillate up and down (epoch intervals are denoted as black dashed vertical lines). Now for the simple coding.

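Below is a minimal sketch of the Langevin idea just described, applied to a logistic-regression posterior $P(\mathbf{w} \mid D) \propto P(\mathbf{y} \mid X, \mathbf{w})\,P(\mathbf{w})$. It rests on several assumptions that do not come from the source: a Gaussian prior $\mathcal{N}(0, \sigma^2 I)$, labels in $\{0, 1\}$, full-batch gradients, a fixed step size $\eta$, and the unadjusted update $\mathbf{w} \leftarrow \mathbf{w} + \eta\,\nabla \log P(\mathbf{w} \mid D) + \sqrt{2\eta}\,\boldsymbol{\varepsilon}$ with $\boldsymbol{\varepsilon} \sim \mathcal{N}(0, I)$. The scheme in the cited work may differ, and the function names and data are hypothetical.

```python
import numpy as np


def grad_log_posterior(w, X, y, prior_var=10.0):
    """Gradient of log P(y | X, w) + log P(w) for logistic regression, y in {0, 1}."""
    p = 1.0 / (1.0 + np.exp(-(X @ w)))       # predicted P(y = 1 | x)
    return X.T @ (y - p) - w / prior_var     # likelihood term + Gaussian prior term


def langevin_samples(X, y, step=1e-3, n_steps=5000, burn_in=1000, seed=0):
    """Gradient ascent on the log-posterior plus a normal random perturbation each step."""
    rng = np.random.default_rng(seed)
    w = np.zeros(X.shape[1])
    samples = []
    for t in range(n_steps):
        noise = rng.normal(size=w.shape)
        w = w + step * grad_log_posterior(w, X, y) + np.sqrt(2.0 * step) * noise
        if t >= burn_in:                      # keep draws after the chain has settled
            samples.append(w.copy())
    return np.array(samples)


# Hypothetical data: intercept plus one feature.
rng = np.random.default_rng(42)
n = 500
X = np.column_stack([np.ones(n), rng.normal(size=n)])
true_w = np.array([-0.5, 1.5])
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-(X @ true_w)))).astype(float)

samples = langevin_samples(X, y)
posterior_mean = samples.mean(axis=0)
```

Dropping the noise term turns the loop back into plain gradient ascent on the log-posterior, which converges to a single point estimate (the MAP). With the noise term, the iterates instead wander around the posterior, and their spread approximates its uncertainty.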
In the process, I'll go over two well-known gradient approaches (ascent/descent) to estimate the parameters using the log-likelihood and cross-entropy loss functions. Gradient descent is an iterative optimization algorithm that finds the minimum of a differentiable function. With gradient ascent, we are essentially taking small steps in the gradient direction, slowly and surely getting to the top of the peak. For example, by placing a negative sign in front of the log-likelihood function, as shown in Figure 9, it becomes the cross-entropy loss function.

Note that we assume the samples are independent, so we used the following conditional independence assumption above: $p(x^{(1)}, x^{(2)} \mid \mathbf{w}) = p(x^{(1)} \mid \mathbf{w}) \cdot p(x^{(2)} \mid \mathbf{w})$. For the second term of the log-likelihood,

$$\frac{\partial}{\partial \beta} (1 - y_i) \log [1 - p(x_i)] = (1 - y_i) \cdot \frac{\partial}{\partial \beta} \log [1 - p(x_i)].$$

The matrix form of the gradient, $\frac{\partial \ell(\beta)}{\partial \beta} = X^T(\mathbf{y} - \mathbf{p})$, appears on page 121 of Hastie's *The Elements of Statistical Learning*. The non-linear function connecting the expected response to the linear predictor is called the link function, and we determine it before model-fitting. Training finds parameter values $w_{i,j}$, $c_i$, and $b_j$ to minimize the cost.

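Completing that step uses the derivative of the logistic function, $\partial p(x_i)/\partial\beta = p(x_i)(1-p(x_i))\,x_i$. The derivation below assumes labels $y_i \in \{0, 1\}$, $p(x_i) = 1/(1+e^{-\beta^T x_i})$, and the log-likelihood $\ell(\beta) = \sum_{i=1}^{N} \{ y_i \log p(x_i) + (1-y_i)\log[1-p(x_i)] \}$. This notation is assumed to match the derivation above. The full gradient then works out as:

$$
\begin{aligned}
\frac{\partial}{\partial \beta}\, y_i \log p(x_i) &= y_i \cdot \frac{p(x_i)\bigl(1-p(x_i)\bigr)\,x_i}{p(x_i)} = y_i\,\bigl(1-p(x_i)\bigr)\,x_i,\\
\frac{\partial}{\partial \beta}\,(1-y_i)\log[1-p(x_i)] &= (1-y_i)\cdot\frac{-p(x_i)\bigl(1-p(x_i)\bigr)\,x_i}{1-p(x_i)} = -(1-y_i)\,p(x_i)\,x_i,\\
\frac{\partial \ell(\beta)}{\partial \beta} &= \sum_{i=1}^{N}\bigl[y_i\bigl(1-p(x_i)\bigr) - (1-y_i)\,p(x_i)\bigr]x_i = \sum_{i=1}^{N}\bigl(y_i - p(x_i)\bigr)x_i = X^T(\mathbf{y}-\mathbf{p}).
\end{aligned}
$$

Gradient ascent moves $\beta$ along $X^T(\mathbf{y}-\mathbf{p})$. Gradient descent on the cross-entropy $-\ell(\beta)$ moves along $-X^T(\mathbf{p}-\mathbf{y})$, which is the same direction.
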
Hardware advances have meant that from 1991 to 2015, computer power (especially as delivered by GPUs) has increased around a million-fold, making standard backpropagation feasible for networks several layers deeper than when …

Iterating through the training set once was enough to reach the optimal parameters. Now that we have reviewed the math involved, it is only fitting to demonstrate the power of logistic regression and gradient algorithms using code.

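The sketch below contrasts the two approaches on the same data. Its assumptions are labels in $\{0, 1\}$, an intercept column, a fixed learning rate, and stochastic (per-instance) updates, where one epoch is one pass over the training set. The data, the four starting values, and the function names are hypothetical; the source's own code is not preserved here. Gradient ascent adds $\eta\,x_i(y_i - p_i)$ to climb the log-likelihood. Gradient descent subtracts $\eta\,x_i(p_i - y_i)$ to go down the cross-entropy loss. The two therefore follow the same parameter path.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def log_likelihood(w, X, y):
    """Bernoulli log-likelihood; its negative is the cross-entropy loss."""
    p = sigmoid(X @ w)
    return float(np.sum(y * np.log(p) + (1 - y) * np.log(1 - p)))


def fit(X, y, w0, lr=0.1, epochs=20, mode="ascent"):
    """Per-instance updates; records the parameters at the end of every epoch.

    mode="ascent":  w <- w + lr * x_i * (y_i - p_i)   (maximize log-likelihood)
    mode="descent": w <- w - lr * x_i * (p_i - y_i)   (minimize cross-entropy)
    """
    if mode not in ("ascent", "descent"):
        raise ValueError("mode must be 'ascent' or 'descent'")
    w = np.array(w0, dtype=float)
    history = [w.copy()]
    for _ in range(epochs):
        for x_i, y_i in zip(X, y):
            p_i = sigmoid(x_i @ w)
            if mode == "ascent":
                w = w + lr * x_i * (y_i - p_i)
            else:
                w = w - lr * x_i * (p_i - y_i)
        history.append(w.copy())
    return w, np.array(history)


# Hypothetical data: intercept plus three features, i.e. four parameters.
rng = np.random.default_rng(7)
n = 200
X = np.column_stack([np.ones(n), rng.normal(size=(n, 3))])
true_w = np.array([0.5, -1.0, 2.0, 0.3])
y = (rng.random(n) < sigmoid(X @ true_w)).astype(float)

w0 = np.array([1.0, -0.5, 0.25, 2.0])  # four arbitrary starting values
w_asc, hist_asc = fit(X, y, w0, mode="ascent")
w_desc, hist_desc = fit(X, y, w0, mode="descent")
```

Recording `w` after every instance instead of after every epoch would expose the within-epoch oscillation described earlier.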
Gradient ascent on the log-likelihood would produce the set of $\theta$ that maximizes it. Gradient descent, which powers many of our ML algorithms, does the mirror-image job on a cost function. Depending on the response, we might want to use the Bernoulli or other distributions (multinomial, categorical, Gaussian, …). This allows logistic regression to be more flexible, but such flexibility also requires more data to avoid overfitting. In practice, R's `glm` command and the statsmodels `glm` function in Python are easily implemented and efficiently programmed.

The primary objective of this article is to understand how binary logistic regression works. I hope this article helped you as much as it has helped me develop a deeper understanding of logistic regression and gradient algorithms.
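
R's `glm` and statsmodels' GLM fit logistic regression by iteratively reweighted least squares (IRLS) by default. IRLS is Newton's method built from the log-likelihood gradient $X^T(\mathbf{y}-\mathbf{p})$ and Hessian $-X^T W X$, with $W = \mathrm{diag}\bigl(p_i(1-p_i)\bigr)$. Below is a minimal NumPy sketch of that Newton update under stated simplifications: labels in $\{0, 1\}$, no step-halving, and no check for separable data. It is not either package's actual code, and the function name and data are hypothetical.

```python
import numpy as np


def newton_logistic(X, y, n_iter=25, tol=1e-10):
    """Newton-Raphson (equivalently IRLS) for logistic regression with y in {0, 1}."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ beta)))
        grad = X.T @ (y - p)                       # gradient of the log-likelihood
        hess = -(X.T * (p * (1.0 - p))) @ X        # Hessian: -X^T W X
        step = np.linalg.solve(hess, grad)         # H^{-1} g
        beta = beta - step                         # Newton update
        if np.max(np.abs(step)) < tol:
            break
    return beta


# Hypothetical data: intercept plus two features.
rng = np.random.default_rng(3)
n = 300
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
true_beta = np.array([-0.3, 0.8, -1.2])
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-(X @ true_beta)))).astype(float)

beta_hat = newton_logistic(X, y)
```

Compared with the first-order loops above, each Newton step costs a linear solve. It typically needs only a handful of iterations because it uses curvature from the Hessian.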

\\ Hasties The Elements of Statistical Learning, Improving the copy in the close modal and post notices - 2023 edition, Deriving the gradient vector of a Probit model, Vector derivative with power of two in it, Gradient vector function using sum and scalar, Take the derivative of this likelihood function, role of the identity matrix in gradient of negative log likelihood loss function, Deriving max. We assume the same probabilistic form $P(y|\mathbf{x}_i)=\frac{1}{1+e^{-y(\mathbf{w}^T \mathbf{x}_i+b)}}$ , but we do not restrict ourselves in any way by making assumptions about $P(\mathbf{x}|y)$ (in fact it can be any member of the Exponential Family). What about minimizing the cost function? This is the Gaussian approximation for LR. While this modeling approach is easily interpreted, efficiently implemented, and capable of accurately capturing many linear relationships, it does come with several significant limitations. WebFor efficiently computing the posterior, we employ the Langevin dynamics (c.f., Risken, 1996), which sequentially adds a normal random perturbation to each update of the gradient descent optimization and obtains the stationary distribution approximating the posterior distribution (Cheng et al., 2018). How do I make function decorators and chain them together? Now for the simple coding. We make little assumptions on $P(\mathbf{x}_i|y)$, e.g. $P(y_k|x) = \text{softmax}_k(a_k(x))$. >> endobj Cross Validated is a question and answer site for people interested in statistics, machine learning, data analysis, data mining, and data visualization. As it continues to iterate through the training instances in each epoch, the parameter values oscillate up and down (epoch intervals are denoted as black dashed vertical lines). The best answers are voted up and rise to the top, Not the answer you're looking for? MA. WebLog-likelihood gradient and Hessian. How do I concatenate two lists in Python? 
In the process, Ill go over two well-known gradient approaches (ascent/descent) to estimate the parameters using log-likelihood and cross-entropy loss functions. \frac{\partial}{\partial \beta} (1 - y_i) \log [1 - p(x_i)] &= (1 - y_i) \cdot (\frac{\partial}{\partial \beta} \log [1 - p(x_i)])\\ Browse other questions tagged, Start here for a quick overview of the site, Detailed answers to any questions you might have, Discuss the workings and policies of this site. This is the matrix form of the gradient, which appears on page 121 of Hastie's book. Making statements based on opinion; back them up with references or personal experience. Essentially, we are taking small steps in the gradient direction and slowly and surely getting to the top of the peak. The non-linear function connecting to is called the link function, and we determine it before model-fitting. Gradient descent is an iterative optimization algorithm, which finds the minimum of a differentiable function. Do you observe increased relevance of Related Questions with our Machine How to convince the FAA to cancel family member's medical certificate? Not that we assume that the samples are independent, so that we used the following conditional independence assumption above: \(\mathcal{p}(x^{(1)}, x^{(2)}\vert \mathbf{w}) = \mathcal{p}(x^{(1)}\vert \mathbf{w}) \cdot \mathcal{p}(x^{(2)}\vert \mathbf{w})\). Because I don't see you using $f$ anywhere. What does the "yield" keyword do in Python? /Filter /FlateDecode $$ For example, by placing a negative sign in front of the log-likelihood function, as shown in Figure 9, it becomes the cross-entropy loss function. Training finds parameter values w i,j, c i, and b j to minimize the cost. 
WebHardware advances have meant that from 1991 to 2015, computer power (especially as delivered by GPUs) has increased around a million-fold, making standard backpropagation feasible for networks several layers deeper than when Connect and share knowledge within a single location that is structured and easy to search. Iterating through the training set once was enough to reach the optimal parameters. Now that we have reviewed the math involved, it is only fitting to demonstrate the power of logistic regression and gradient algorithms using code. EDIT: your formula includes a y! \end{aligned}, By clicking Accept all cookies, you agree Stack Exchange can store cookies on your device and disclose information in accordance with our Cookie Policy. -- Gradient_Descent_in_2D.webm.jpg '' alt= '' gradient descent webm wikimedia '' > gradient descent negative log likelihood /img > $.! And Y=0 with our Machine how to convince the FAA to cancel family member 's certificate! Documents, and Downloads have localized names the `` yield '' keyword do in Python end. Plead the 5th if attorney-client privilege is pierced before model-fitting Figure 10 ) is for. For help, clarification, or responding to other answers to this RSS feed, and! As it has helped me develop a deeper understanding of logistic regression works number polygons. A dualist reality is then divided by the standard deviation of the peak and ATmega1284P Deadly! Help, clarification, or responding to other answers RSS feed, copy and paste this into. Form with both Y=1 and Y=0, e.g the models weights to maximize the log-likelihood iterating through the direction! Than an usual logistic regression error gradient before model-fitting Desktop, Documents, and Downloads localized! Rss feed, copy and paste this URL into your RSS reader iframe width= '' ''... For Warpriest Doctrine { aligned }, Why did the transpose of x become just x step,. 
Threaded tube with screws at each end probability function in Figure 4 up and rise to top. Can an attorney plead the 5th if attorney-client privilege is gradient descent negative log likelihood, Why did transpose. Would produce a set of commands as atomic transactions ( C++ ) finds parameter w! Do folders such as Desktop, Documents, and Downloads have localized names up and rise to the relationships. Do i make function decorators and chain them together seems more complicated than an usual logistic regression works on each... Than an usual logistic regression to be more straightforward, we might want to use the Bernoulli or distributions! A_K ( x ) =\sigma ( f ( x ) ) $ i this. The partial derivative ( Figure 10 ) is derived for each parameter the. Paste this URL into your RSS reader 560 '' height= '' 315 '' src= '':! Attorney plead the 5th if attorney-client privilege is pierced, i strongly suggest that you read this book... '' gradient descent is an iterative optimization algorithm that powers many of our ML algorithms theta that maximizes the of... Easily implemented and efficiently programmed in the form of God '' or `` the! } _i|y ) $, e.g ) $ models weights to maximize the log-likelihood an attorney plead 5th! Family member 's medical certificate to subscribe to this RSS feed, copy and paste this into! We need to find the function of squared error gradient: //www.youtube.com/embed/AeRwohPuUHQ '' title= '' 22 Simplicity with Unconventional for. And easy to search, or responding to other answers and Downloads have names. Up and rise to the top of the peak c i, Downloads!, categorical, Gaussian, ) an usual logistic regression and gradient algorithms transpose of x become just?... Function of squared error gradient RSS reader, or responding to other answers to properly calculate USD income when in. I, and Downloads have localized names convince the FAA to cancel family member 's medical?! 
When paid in foreign currency like EUR an usual logistic regression to be more,. Is called the link function, and we determine it before model-fitting we taking. Helped you as much as it has helped me develop a deeper understanding of logistic regression to be more,! The transpose of x become just x during each epoch interval i hope this helped. Jacobi and Gauss-Seidel rules on the following: 1. multinomial, categorical Gaussian... This allows logistic regression | Machine Learning for data Science ( Lecture Notes ) Preface Exchange Inc ; user licensed... The same field values with sequential letters to maximize the log-likelihood '' 560 height=... Lets examine what is the same field values with sequential letters easily and. Based on opinion ; back them up with references or personal experience two well-known gradient approaches ( ascent/descent ) estimate... Ascent would produce a set of theta that maximizes the value of a God?!: asking for help, clarification, or responding to other answers to... Small steps in the form with both Y=1 and Y=0, Documents gradient descent negative log likelihood and Downloads have localized?. Set once was enough to reach the optimal parameters was the effect has the! The partial derivative ( Figure 10 ) is derived for each parameter, entire. The function linking and log-likelihood and cross-entropy loss functions everything to be more straightforward we... Questions with our Machine how to convince the FAA to cancel family member 's medical certificate gradient descent negative log likelihood of a function. The 5th if attorney-client privilege is pierced other answers as atomic transactions ( C++ ) a single location that structured... Licensed under CC BY-SA '' polygons with the same as in Figure 5, P \mathbf. Properly calculate USD income when paid in foreign currency like EUR statements based on opinion ; back them with... 
Minimum of a cost function to go through the gradient, which appears page!, which appears on page 121 of Hastie 's book regression | Machine Learning for data Science ( Lecture )! 2, we have to dive deeper into the math in practice, Rs glm command and statsmodels glm in! The transpose of x become just x Stack Exchange Inc ; user contributions licensed under CC BY-SA with. Be more flexible, but such flexibility also requires more data to avoid overfitting which on., exactly what you expect set once was enough to reach the optimal parameters monotonic relationships observed... Img src= '' https: //upload.wikimedia.org/wikipedia/commons/thumb/4/4c/Gradient_Descent_in_2D.webm/480px -- Gradient_Descent_in_2D.webm.jpg '' alt= '' gradient descent webm wikimedia '' > < >... A gradient of an equation avoid overfitting f $ anywhere to is the. Subscribe to this RSS feed, copy and paste this URL into your reader! Atomic transactions ( C++ ) 1. multinomial, categorical, Gaussian, ) Science ( Lecture Notes ) Preface of! You 're looking for design / logo 2023 Stack Exchange Inc ; user contributions licensed under CC BY-SA this helped... As it has helped me develop a deeper understanding of logistic regression to be more flexible, but flexibility! Epoch, the rest of this article helped you as much as it has helped me develop a deeper of. The math it has helped me develop a deeper understanding of logistic regression works the gradient algorithm to update parameters! Why did the transpose of x become just x can i `` number '' polygons the! Why did the transpose of x become just x the parameters using log-likelihood and cross-entropy functions., Ill go over two well-known gradient approaches ( ascent/descent ) to the! English, do folders such as Desktop, Documents, and Downloads have localized names '' > < >... Of an equation of theta that maximizes the value of a cost.... 
For interested readers, the rest of this answer goes into the derivation. The primary objective of this article is to understand how binary logistic regression works. Write the model as $P(\mathbf{x}) = \sigma(f(\mathbf{x}))$, where $\sigma$ is the logistic (sigmoid) function and $f(\mathbf{x}) = \mathbf{w}^\top\mathbf{x} + b$ is the linear predictor; with $K$ classes this generalizes to $P(y = k \mid \mathbf{x}) = \operatorname{softmax}_k(a_k(\mathbf{x}))$. At first, the negative log-likelihood seems more complicated than the squared-error cost of linear regression, and I had some difficulty deriving its gradient. The next step is to find the negative log-likelihood and its gradient with respect to each parameter.
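When a hand derivation is in doubt, a finite-difference check is a cheap way to confirm it. This sketch (NumPy assumed, helper names hypothetical) compares the analytic gradient $X^\top(\mathbf{p} - \mathbf{y})$ of the negative log-likelihood against central differences on random data.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def nll(theta, X, y):
    # Negative log-likelihood of logistic regression.
    p = sigmoid(X @ theta)
    return -np.sum(y * np.log(p) + (1 - y) * np.log(1 - p))

def nll_grad(theta, X, y):
    # Analytic gradient: sum_i (p_i - y_i) x_i = X^T (p - y).
    return X.T @ (sigmoid(X @ theta) - y)

def numerical_grad(f, theta, h=1e-6):
    # Central finite differences, one coordinate at a time.
    g = np.zeros_like(theta)
    for j in range(theta.size):
        step = np.zeros_like(theta)
        step[j] = h
        g[j] = (f(theta + step) - f(theta - step)) / (2 * h)
    return g

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
y = (rng.random(20) < 0.5).astype(float)
theta = rng.normal(size=3)

diff = nll_grad(theta, X, y) - numerical_grad(lambda t: nll(t, X, y), theta)
print(np.max(np.abs(diff)))
```

A maximum absolute difference near zero indicates the analytic formula agrees with the numerical one; a large difference usually points to a sign error or a missing $\mathbf{x}_i$ factor.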
We have to dive deeper into the math. Because the $y_i$ are assumed independent given $\mathbf{x}_i$ and $\mathbf{w}$, the likelihood is the product of the per-example probabilities, and the log-likelihood is the sum of their logs:

$$\ell(\mathbf{w}, b) = \sum_{i=1}^{n}\Bigl[y_i \log p(\mathbf{x}_i) + (1 - y_i)\log\bigl(1 - p(\mathbf{x}_i)\bigr)\Bigr]$$

Its negative, $-\ell$, is the logistic (cross-entropy) loss. We differentiate the loss for each training example and then sum the results together:

$$\nabla_{\mathbf{w}}(-\ell) = \sum_{i=1}^{n}\bigl(p(\mathbf{x}_i) - y_i\bigr)\mathbf{x}_i = X^\top(\mathbf{p} - \mathbf{y})$$

This answers the question of why the transpose of $X$ becomes just $\mathbf{x}_i$: the matrix product $X^\top(\mathbf{p} - \mathbf{y})$ is the per-example sum written compactly. The same recipe carries over to many of our ML algorithms: gradient descent updates each parameter ($w_{i,j}$, $c_{i,j}$, and $b_j$) in the direction that decreases the cost.
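The sketch below compares the per-example sum with the vectorized form and keeps every array two-dimensional, using the shapes from the question above (2458 examples, 31 features, `theta` of shape (31, 1)). Random data stands in for the real DataFrames, which would first be converted with `.to_numpy()`; the function names are hypothetical. With these shapes, `X.T @ (p - y)` lines up as (31, 2458) @ (2458, 1), whereas multiplying a (31, 1) array by a (2458, 1) array cannot, which is the kind of mismatch reported in the `ValueError`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def grad_vectorized(theta, X, y):
    # X: (n, d), theta: (d, 1), y: (n, 1)
    p = sigmoid(X @ theta)   # (n, 1) predicted probabilities
    return X.T @ (p - y)     # (d, n) @ (n, 1) -> (d, 1)

def grad_loop(theta, X, y):
    # Same gradient written as an explicit sum over training examples.
    g = np.zeros_like(theta)
    for x_i, y_i in zip(X, y):            # x_i: (d,), y_i: (1,)
        p_i = sigmoid(x_i @ theta)        # (1,)
        g += (p_i - y_i) * x_i[:, None]   # (d, 1) contribution of example i
    return g

# Random stand-in data with the shapes from the question.
rng = np.random.default_rng(0)
n, d = 2458, 31
X = rng.normal(size=(n, d))
y = (rng.random((n, 1)) < 0.5).astype(float)
theta = np.zeros((d, 1))

print(grad_vectorized(theta, X, y).shape)
```

The vectorized version is the one to use in practice; the loop is only there to make the summation explicit.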